Spherical Lights

As mentioned in the previous post, I've decided to start looking at rendering again to help me learn a new programming language, Rust. I initially started with just point light sources, but I have recently added spherical light sources too. Why bother? What is the difference?

Point vs. Spherical Lights

In the real world there are no point light sources; everything has a volume. If the source is small or far away then it is approximately a point source. Ignoring attenuation, a point source delivers the same amount of light to every point it can reach. This means that when an object casts a shadow, the part in shade gets no light at all and the part in the light gets the full amount.

However, when a light source has a volume that is no longer true. Every part of its surface emits light. This alters shadows: the amount of light received at each point now depends on how much of the light source is visible from it. If the source is totally obscured then no light will be received. If the source is totally visible then the full amount will be received. In between, a variable amount will be received. This leads to soft shadows: instead of the shadow going sharply from 0% light to 100%, there will be a smooth gradient.

Adding to the Ray Tracer

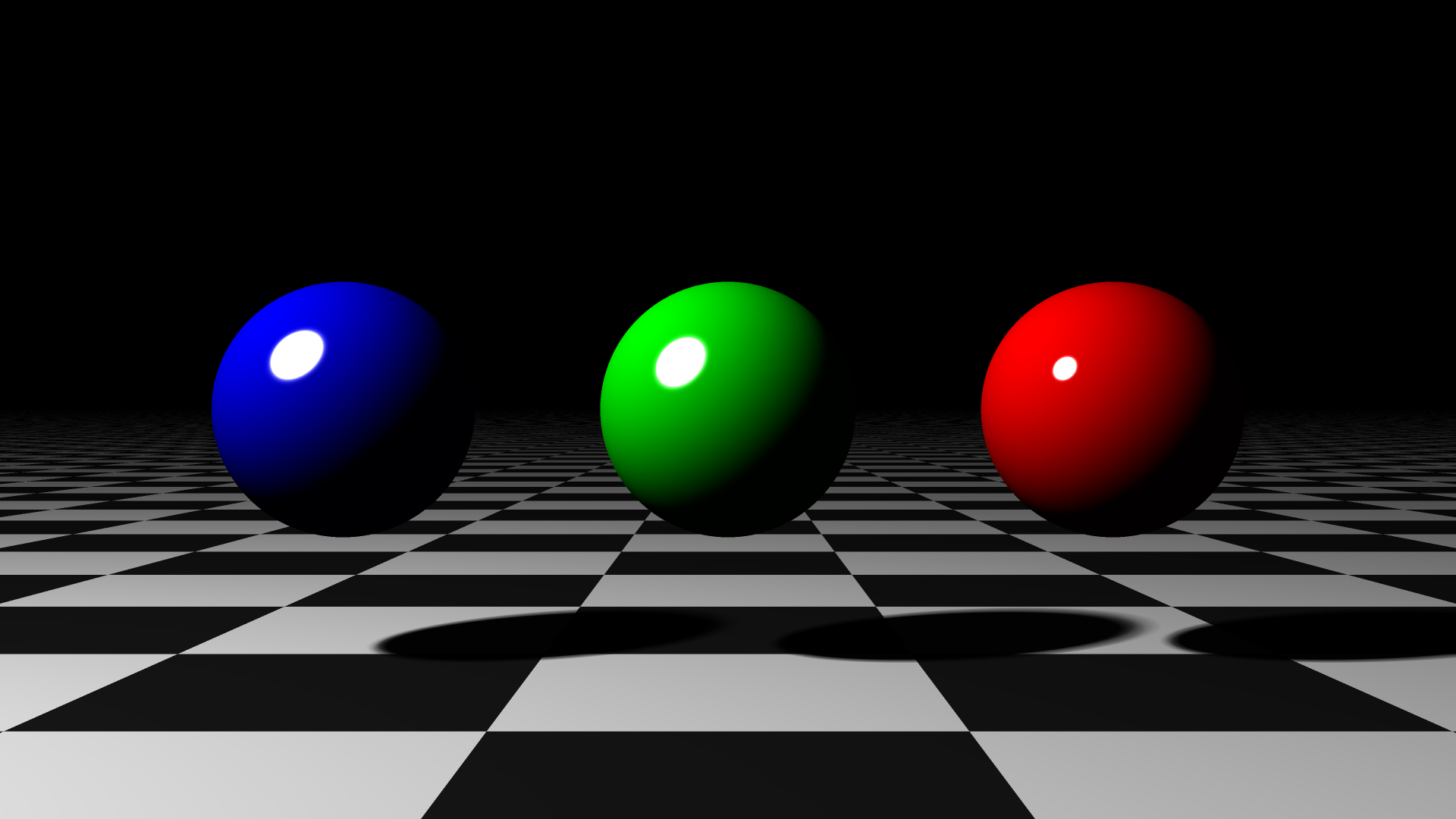

How do we add this to the ray tracer? We could calculate the amount of the light visible at each point, however this could get very complicated very quickly, especially for multiple complex shapes in front of the light. And easier approach is to sample points on the surface of the light and treat each one as a point light. We can then average the results from each separate points to get our approximation. Adding this to our ray tracer gives us the soft shadows we're looking for:
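The averaging step can be sketched roughly as follows. This is a minimal illustration rather than the code from the ray tracer itself: the `Vec3` alias, the function name and the `shade_point_light` callback are all hypothetical, and a single `f64` intensity stands in for a full colour.

```rust
/// Hypothetical stand-in for the ray tracer's vector type.
type Vec3 = [f64; 3];

/// Approximates a spherical light by averaging the contribution of a point
/// light placed at each sample position on its surface.
///
/// `shade_point_light` is an assumed callback that returns the intensity a
/// point light at the given position contributes to the intersection being
/// shaded (0.0 if that position is in shadow).
fn average_over_light<F>(light_samples: &[Vec3], shade_point_light: F) -> f64
where
    F: Fn(Vec3) -> f64,
{
    if light_samples.is_empty() {
        return 0.0;
    }
    let total: f64 = light_samples
        .iter()
        .map(|&sample| shade_point_light(sample))
        .sum();
    total / light_samples.len() as f64
}
```

If half of the sample positions are hidden behind another object, the result is half the unshadowed intensity, which is what produces the gradient at the edge of a shadow.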

How do we sample the points on the surface of the sphere? I've added two methods, random and uniform. With both methods we will want to ensure that the points are evenly distributed over the surface.

Random Sampling

For the random method we can use spherical co-ordinates to ensure the even distribution, provided we already have a generator that can give us random numbers in the interval \([0,1)\). Spherical co-ordinates are defined in terms of radius \(r\), polar angle \(\theta\) and azimuthal angle \(\phi\), where \(\theta \in [0, \pi]\) and \(\phi \in [0, 2\pi)\). These map to normal Cartesian co-ordinates via the formulae \(x = r \sin \theta \cos \phi\), \(y = r \sin \theta \sin \phi\) and \(z = r \cos \theta\).

Whilst we can map our uniform numbers to \(\phi\) quite easily, we cannot do the same for \(\theta\). We can think of our spherical co-ordinates as giving us circles around the sphere parallel to the \(xy\) plane, one for each value of \(\theta\). As we move towards the poles these circles become smaller, so including the same number of points on each circle would pack the points more densely near the poles. Instead we apply our uniform distribution to \(z\) over the range \([-r,r]\), and map back to \(\theta\) using the formula for \(z\) above, \(\theta = \cos^{-1}(z/r)\). This gives us the following formulae for two random numbers \(A\) and \(B\):

\[\phi = 2 \pi A \\ \theta = \cos^{-1}(2 B - 1)\]
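As a rough sketch, the mapping could look like this in Rust, taking \(\theta\) as the polar angle and \(\phi\) as the azimuthal angle to match the Cartesian formulae. The function name and the `[f64; 3]` return type are my own choices for illustration, and the two random numbers are passed in as parameters so that the example does not assume any particular random number generator.

```rust
use std::f64::consts::PI;

/// Maps two uniform random numbers `a` and `b`, each in [0, 1), to a point on
/// the surface of a sphere of radius `r` centred on the origin.
fn random_point_on_sphere(r: f64, a: f64, b: f64) -> [f64; 3] {
    // Azimuthal angle: uniform around the z axis, in [0, 2π).
    let phi = 2.0 * PI * a;
    // Polar angle: chosen so that z = r cos(theta) is uniform in [-r, r].
    let theta = (2.0 * b - 1.0).acos();
    [
        r * theta.sin() * phi.cos(),
        r * theta.sin() * phi.sin(),
        r * theta.cos(),
    ]
}
```

To place the point on a light, the centre of the light would then be added to the result.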

Uniform Sampling

There are many methods to uniformly map points over a sphere but one of the most popular is the Fibonacci Sphere algorithm. This utilises a property of the Golden Angle, which is often found in nature. (The Golden Angle is defined from the Golden Ratio and is therefore strongly related to the Fibonacci Sequence, giving the algorithm its name.) If you step around a circle, incrementing by the Golden Angle each time, then the points will be approximately evenly distributed around the circle, with 2 or 3 points being added each revolution.

Once we have our distribution around a circle for \(n\) points we can easily map to a sphere using the same approach of mapping to \(z\) as above, except this time we space the points evenly in \(z\) rather than randomly. This gives us the following formulae for point number \(i\) when generating a total of \(n\) points, where \(G_a\) is the Golden Angle:

\[\phi = G_a i \\ \theta = \cos^{-1}\left(1 - \frac{2i}{n}\right)\]
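A minimal sketch of these formulae in Rust is shown below, with \(\phi\) as the azimuthal angle and \(\theta\) as the polar angle. The function name and return type are assumptions for illustration. The Golden Angle is taken as \(\pi(3 - \sqrt{5})\) radians, roughly 137.5 degrees, and \(i\) is assumed to run from \(0\) to \(n - 1\).

```rust
use std::f64::consts::PI;

/// Generates `n` points on a sphere of radius `r` centred on the origin
/// using the Fibonacci Sphere approach.
fn fibonacci_sphere(r: f64, n: usize) -> Vec<[f64; 3]> {
    // Golden Angle in radians, approximately 2.39996.
    let golden_angle = PI * (3.0 - 5.0_f64.sqrt());
    (0..n)
        .map(|i| {
            // Azimuthal angle: step round the z axis by the Golden Angle.
            let phi = golden_angle * (i as f64);
            // Polar angle: z = r cos(theta) is evenly spaced from r down towards -r.
            let theta = (1.0 - 2.0 * (i as f64) / (n as f64)).acos();
            [
                r * theta.sin() * phi.cos(),
                r * theta.sin() * phi.sin(),
                r * theta.cos(),
            ]
        })
        .collect()
}
```

With this indexing the first point always sits exactly on the pole at \(z = r\). Some implementations offset \(i\) by a half to avoid that, but the version here follows the formulae as written.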

Number of Samples

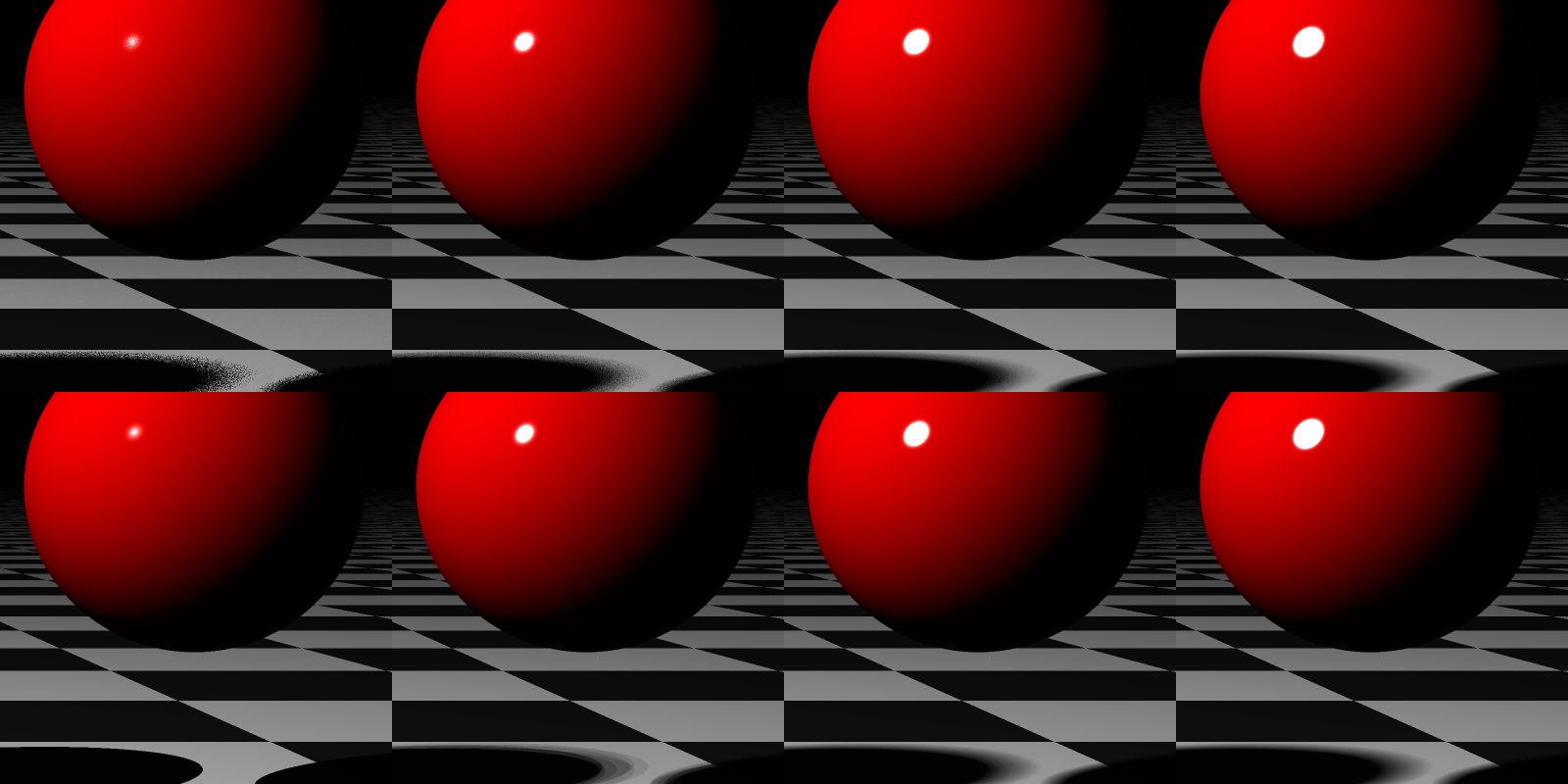

This sampling increases the time it takes to render a picture. For each ray intersection we are now having to trace multiple rays back to each spherical light rather than just a single one. The more samples we do the more accurate our picture will be, but the longer it will take to render. The following images shows a section of the scene above rendered with the two different methods for a range of sample:

A few things to note:

- Both are pretty much the same with 1,000 samples.

- For fewer samples, random gives a speckled pattern whereas uniform gives a banded pattern. This is because uniform locks the points in place, giving the same positions for every intersection, whereas random changes them each time. If you cache the random positions and use the same set each time then that produces bands too.

- A single uniform point is equivalent to a point light source. Random sampling with a single point still gives some soft shadow, however, because the position of the random point is different for every intersection.

- The size of the specular highlight also increases with more samples.

Potential Improvements

- If the light were directly in view of the camera it wouldn't show up in the image, as I'm treating lights separately from objects. A workaround would be to add a spherical object at the same place that doesn't have shading and just gives the colour of its light on intersection.

- To reduce rendering times, the number of samples could be dynamic, depending on how far away the light is from the intersection and on its attenuation. After all, stars are massive but appear as points to us.

- I'm currently sampling over the entire sphere, meaning light from the far side of the sphere is also being included as if it passed clean through the light. Really it should be the hemisphere facing towards the intersection only.
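For the last point, one possible approach (not something the ray tracer currently does) is to discard any sample whose outward surface normal points away from the intersection. The sketch below uses hypothetical helper names and a plain `[f64; 3]` as the vector type. How the remaining samples should be weighted when averaging is left open.

```rust
/// Hypothetical stand-in for the ray tracer's vector type.
type Vec3 = [f64; 3];

fn sub(a: Vec3, b: Vec3) -> Vec3 {
    [a[0] - b[0], a[1] - b[1], a[2] - b[2]]
}

fn dot(a: Vec3, b: Vec3) -> f64 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

/// Returns true if `sample`, a point on the surface of a spherical light
/// centred at `centre`, faces the intersection point `hit`.
fn faces_intersection(centre: Vec3, sample: Vec3, hit: Vec3) -> bool {
    // Outward normal of the sphere at the sample (not normalised).
    let normal = sub(sample, centre);
    // Direction from the sample towards the intersection.
    let to_hit = sub(hit, sample);
    dot(normal, to_hit) > 0.0
}

/// Keeps only the samples on the side of the light facing `hit`.
fn facing_samples(centre: Vec3, samples: &[Vec3], hit: Vec3) -> Vec<Vec3> {
    samples
        .iter()
        .copied()
        .filter(|&sample| faces_intersection(centre, sample, hit))
        .collect()
}
```

Note that for an intersection close to the light this keeps slightly less than a full hemisphere, since points near the edge of the facing hemisphere are hidden by the curve of the sphere itself.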

For those interested, the source code can be found at https://github.com/MrKWatkins/rust-rendering.